As part of our ongoing exploration of how AI can support teaching and learning, we recently ran a small-scale trial using AI to generate feedback on scientific reports submitted by Chemistry students. This followed on from our earlier work with the Birmingham Award, where we used AI to provide consistent, structured feedback at scale.

In this trial, we set out to understand whether similar tools could support the marking of technical, structured work (in this case, lab-based scientific reports), and what quality, consistency, and time savings we might expect.

Why Explore This?

Marking scientific reports is a resource-intensive task. Even with clear rubrics and structured templates, reviewing work for scientific accuracy, clarity, and academic presentation can be time-consuming. With hundreds of reports to assess, we wanted to explore whether AI could help streamline the feedback process — while still providing value to students.

Method 1: Manual Text Extraction and Analysis

Our initial approach involved extracting text from PDF reports using Python-based tools, then analysing that extracted text using a large language model (LLM).

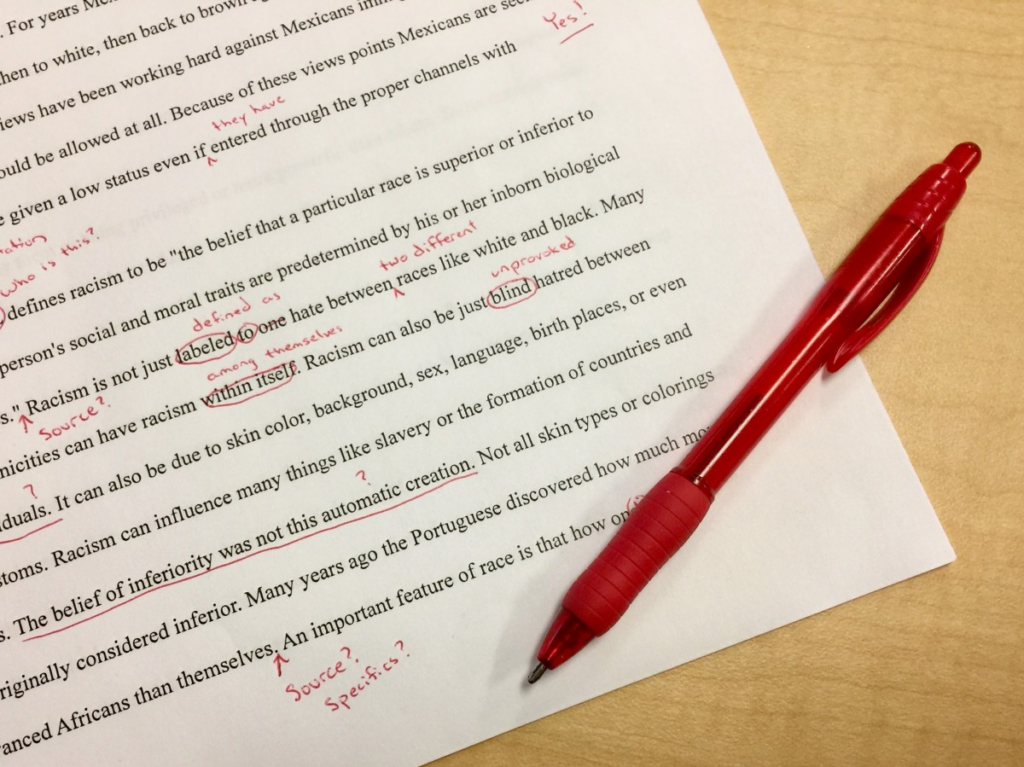

While technically straightforward, this method introduced several issues. The extracted text often had formatting problems: inconsistent spacing; lost context around tables, figures, and captions; and misrepresented scientific notation. These problems degraded the AI-generated feedback, particularly on structure and grammar.
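To make the spacing problem concrete, here is the kind of clean-up pass that extracted PDF text typically needs before it is sent to an LLM. It is a minimal sketch, not the script used in the trial: the helper name `clean_extracted_text` and its rules (rejoining words hyphenated across line breaks, collapsing runs of spaces, squeezing blank lines) are assumptions for illustration.

```python
import re


def clean_extracted_text(raw: str) -> str:
    """Tidy common whitespace debris in text pulled from a PDF (hypothetical helper)."""
    # Rejoin words split by a hyphen at a line break, e.g. "titr-\nated" -> "titrated".
    text = re.sub(r"(\w)-\n(\w)", r"\1\2", raw)
    # Collapse runs of spaces and tabs into a single space.
    text = re.sub(r"[ \t]+", " ", text)
    # Strip leading and trailing spaces on every line.
    text = "\n".join(line.strip() for line in text.splitlines())
    # Squeeze three or more consecutive newlines down to a single blank line.
    text = re.sub(r"\n{3,}", "\n\n", text)
    return text.strip()


sample = "The  solution   was titr-\nated  with\tNaOH.\n\n\n\nResults   follow."
print(clean_extracted_text(sample))
```

Rules like these only repair surface whitespace. They cannot restore the link between a table and its caption or recover a subscript that extraction flattened, and the hyphen rule will wrongly merge genuine compounds that happen to break at a line end. That is consistent with the finding that text-only extraction left the AI short of context.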

In short, the lack of contextual information meant the AI struggled to interpret the reports in a meaningful way. The feedback generated in this phase was limited and often misleading.

Method 2: Using the OpenAI Assistants API

For the second approach, we used the OpenAI Assistants API to upload the original PDF files directly. This allowed the AI to manage the text extraction and interpretation itself, maintaining the full structure and formatting of the original documents.
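A minimal sketch of this workflow using OpenAI's Python SDK (the `openai` package, v1.x) follows. It is an illustration under stated assumptions, not the trial's script: the model name, prompt text, and helper names (`create_marker_assistant`, `build_message`, `feedback_for_pdf`) are hypothetical, and it assumes the `file_search` tool, which the source does not name. Each PDF is uploaded unchanged and attached to a thread message so the assistant reads the document itself. The Assistants endpoints sit under `client.beta` in the SDK, so check OpenAI's current API reference before relying on exact parameters.

```python
from pathlib import Path

# Hypothetical prompt; the trial's actual prompt is not published.
FEEDBACK_PROMPT = (
    "You are marking an undergraduate Chemistry lab report. "
    "Give concise feedback on structure, scientific accuracy, clarity and referencing."
)


def create_marker_assistant(client, model: str = "gpt-4o"):
    """Create an assistant with the file_search tool enabled (model name is an assumption)."""
    return client.beta.assistants.create(
        name="Lab report marker",
        instructions=FEEDBACK_PROMPT,
        model=model,
        tools=[{"type": "file_search"}],
    )


def build_message(prompt: str, file_id: str) -> dict:
    """User message that attaches an uploaded PDF so file_search can read it."""
    return {
        "role": "user",
        "content": prompt,
        "attachments": [{"file_id": file_id, "tools": [{"type": "file_search"}]}],
    }


def feedback_for_pdf(client, assistant_id: str, pdf_path: Path, prompt: str = FEEDBACK_PROMPT) -> str:
    """Upload one PDF, run the assistant on it, and return the reply text."""
    # Upload the original PDF as-is; the API handles extraction and interpretation.
    with pdf_path.open("rb") as fh:
        uploaded = client.files.create(file=fh, purpose="assistants")
    thread = client.beta.threads.create(messages=[build_message(prompt, uploaded.id)])
    # Block until the run reaches a terminal state.
    run = client.beta.threads.runs.create_and_poll(thread_id=thread.id, assistant_id=assistant_id)
    if run.status != "completed":
        raise RuntimeError(f"{pdf_path.name}: run ended with status {run.status}")
    # Messages are listed newest first; keep only those produced by this run.
    messages = client.beta.threads.messages.list(thread_id=thread.id, run_id=run.id)
    return messages.data[0].content[0].text.value


# Usage (requires `pip install openai` and an OPENAI_API_KEY in the environment):
# from openai import OpenAI
# client = OpenAI()
# assistant = create_marker_assistant(client)
# print(feedback_for_pdf(client, assistant.id, Path("report_001.pdf")))  # hypothetical file
```

Passing `client` in as a parameter keeps the helpers free of a hard dependency on the SDK, which also makes them easy to exercise with a stand-in object.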

This method delivered significantly better results. The AI was able to recognise and interpret document features such as headings, tables, figures, chemical formulae, and references. Feedback generated from this approach more closely resembled what a human marker might provide — clearer, more relevant, and better aligned to the structure of the original reports.

Scaling to Real Submissions

Following the promising results from the second method, we applied the approach to a live batch of Chemistry reports — approximately 220 in total.

Students were informed that AI would be used to support the generation of feedback, and advised to interpret the output in that context. The process, run from a single script, completed in under five minutes.
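The source describes the batch as a single script but not how it was organised. The sketch below assumes reports were processed concurrently with a thread pool, and it records per-file failures instead of aborting, so a failed file (like the ~1% reported below) is logged while the rest of the batch continues. The names `run_batch` and `worker`, and the default of eight workers, are hypothetical; `worker` stands for whatever function produces feedback for one file.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from pathlib import Path
from typing import Callable


def run_batch(pdf_paths, worker: Callable[[Path], str], max_workers: int = 8):
    """Apply worker to every report; return (feedback by filename, errors by filename)."""
    feedback, errors = {}, {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Submit every report up front so several requests are in flight at once.
        futures = {pool.submit(worker, path): path for path in pdf_paths}
        for future in as_completed(futures):
            path = futures[future]
            try:
                feedback[path.name] = future.result()
            except Exception as exc:  # one bad file should not stop the whole batch
                errors[path.name] = repr(exc)
    return feedback, errors


# Usage with a dummy worker in place of a real API call:
# feedback, errors = run_batch(sorted(Path("reports").glob("*.pdf")), lambda p: f"Feedback for {p.name}")
```

In practice `max_workers` would be tuned to the API's rate limits rather than chosen for raw speed.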

Observations

Strengths:

- Significant time savings: A manual review of 220 reports might take around 18 hours; the AI completed the task in minutes.

- Consistent tone and structure: Feedback was uniform across all submissions.

- Useful language-level feedback: Grammar and clarity issues were generally well identified.
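The time comparison works out per report as follows, taking the 18-hour manual estimate as given and treating the under-five-minute run time as an upper bound:

$$
\begin{aligned}
\text{manual: } & \frac{18\ \text{h} \times 60\ \text{min/h}}{220\ \text{reports}} \approx 4.9\ \text{min per report} \\
\text{AI: } & \frac{5\ \text{min} \times 60\ \text{s/min}}{220\ \text{reports}} \approx 1.4\ \text{s per report}
\end{aligned}
$$

Overall that is a speed-up of at least $1080 / 5 = 216$ times.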

Limitations:

- Feedback lacked subject-specific depth: While useful at a surface level, the AI could not fully match the insights of a subject expert.

- Occasional file retrieval errors: A small number of submissions (~1%) failed due to issues in retrieving uploaded files.

- Not a fully automated workflow: The process was functional but required technical input to run, making it unsuitable (in its current form) for wider use.
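At about 1% of 220, the retrieval failures amount to two or three reports. If those errors were transient (the source does not say), a retry with exponential backoff is one common mitigation. The sketch below is a hypothetical helper, `with_retries`; the three attempts and two-second base delay are illustrative choices, and catching every `Exception` is deliberately broad where real code would narrow it to the client library's specific error types.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(task: Callable[[], T], attempts: int = 3, base_delay: float = 2.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Call task, retrying on failure with exponential backoff (2 s, 4 s, ...)."""
    for attempt in range(1, attempts + 1):
        try:
            return task()
        except Exception:
            if attempt == attempts:
                raise  # out of retries: surface the last error to the caller
            # Wait longer after each failure before trying again.
            sleep(base_delay * 2 ** (attempt - 1))
    raise ValueError("attempts must be at least 1")


# Usage: with_retries(lambda: fetch_feedback("report_001.pdf"))  # fetch_feedback is hypothetical
```

The `sleep` parameter lets the delay be swapped out, which keeps the helper testable without real waiting.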

Reflections and Opportunities

This trial showed that AI can play a meaningful role in supporting feedback generation for scientific work — especially in terms of speed and consistency. However, it also highlighted important areas for further development.

Areas for improvement

- Prompt engineering: The initial prompt used was deliberately simple. Introducing clearer instructions, expected feedback structure, and section-specific guidance could lead to higher-quality responses.

- Inclusion of exemplar material: Providing the AI with model responses could help it calibrate feedback more effectively.

- Response consistency: Running the same report multiple times occasionally produced different outputs. Improving consistency will be important if the approach is to be used more widely.
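The first two improvements can be combined in a structured prompt that carries section-specific guidance, a fixed output structure, and an optional exemplar. The sketch below is hypothetical: the `FeedbackPrompt` class, its wording, and the default headings are assumptions, not the prompt used in the trial.

```python
from dataclasses import dataclass, field


@dataclass
class FeedbackPrompt:
    """Hypothetical structured prompt: per-section guidance, output headings, exemplar."""
    sections: dict[str, str]  # report section -> what the marker should check
    exemplar: str = ""        # optional model feedback to calibrate tone and depth
    output_headings: list[str] = field(
        default_factory=lambda: ["Strengths", "Areas to improve", "Language and presentation"]
    )

    def render(self) -> str:
        lines = [
            "You are marking an undergraduate Chemistry lab report.",
            "Review each section using the guidance below.",
        ]
        # Section-specific guidance, one bullet per report section.
        for name, guidance in self.sections.items():
            lines.append(f"- {name}: {guidance}")
        # Fix the shape of the answer so every report gets the same structure.
        lines.append("Structure your feedback under these headings, in this order:")
        lines.extend(f"## {heading}" for heading in self.output_headings)
        if self.exemplar:
            lines.append("Match the tone and level of detail of this example feedback:")
            lines.append(self.exemplar)
        return "\n".join(lines)


prompt = FeedbackPrompt(
    sections={"Introduction": "Is the aim stated?", "Results": "Are units and uncertainties given?"},
    exemplar="Clear aim; add error bars to Figure 2.",
)
print(prompt.render())
```

Generating the prompt from one template guarantees that every report receives identical instructions. This removes one source of variation between runs, although it does not remove the model's own sampling variability.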

An interesting learning point was that some tasks we initially expected to solve through manual coding — such as identifying document structure — were handled more effectively when we allowed the AI to interpret the full document directly. This suggests a shift in thinking: rather than simplifying content for the AI, we may often get better results by letting it engage with the original material as-is.

Next Steps

This was very much a proof of concept — a script-based process, run locally, with clear strengths and clear limitations. But it demonstrated real potential.

Looking ahead, we’ll be exploring ways to improve the quality and reliability of the feedback, and considering how a more automated and scalable version of this process could be integrated into existing University systems.

We’ll continue sharing what we learn as we develop these ideas further.