In the world of higher education, marking essays is a fundamental yet time-consuming and often subjective process. But what if we could use generative artificial intelligence (GAI) to assist with this task? That’s what the IT Innovation Team have been exploring by making use of Azure OpenAI services – our private walled-garden instance of GPT.

What is prompt engineering?

Imagine you’re conversing with an intelligent assistant that has all the information you could ever need, but only gives you what you ask for in just the right way. This is the essence of prompt engineering. It’s the art of crafting questions or instructions to elicit specific, useful responses from GAI.

The quality of your prompt is crucial—it can make the difference between a useful response and something completely off the mark. Effective prompt engineering requires clear, specific instructions, a bit of patience, and some trial and error. Several established techniques can significantly enhance the quality of AI-generated outputs:

- Persona-Based Prompts: Instruct the AI to respond in a particular style or character, like an experienced professor, a critical reviewer, or a supportive mentor, to shape the tone and depth of responses.

- Example-Based Prompts: Provide examples to guide the AI’s response. Whether you supply a single high-quality example (one-shot) or several (multi-shot), this technique helps the AI grasp different aspects of the task and apply a more nuanced evaluation.

- Chain of Thought Prompts: Instead of asking for an immediate judgment, guide the AI to process information step-by-step, ensuring a thorough and well-justified assessment, similar to the detailed feedback a human grader might provide.

By combining these techniques, educators can enhance the AI’s ability to provide valuable feedback, improving the learning experience for students. Careful prompt design is key to unlocking AI’s full potential in education.
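To make the combination concrete, here is a minimal sketch of how the three techniques might sit together in one chat-style prompt. It is illustrative only: the function name `build_marking_messages`, the persona wording and the step-by-step instructions are assumptions made for this post, not the prompts used in our proof of concept. It relies only on the widely used system/user/assistant message layout.

```python
from typing import Dict, List, Tuple

Message = Dict[str, str]


def build_marking_messages(
    criterion: str,
    examples: List[Tuple[str, str]],
    essay: str,
) -> List[Message]:
    """Assemble a chat prompt combining persona, examples and chain of thought.

    All wording here is hypothetical and would need tuning by trial and error.
    """
    # Persona-based: shape the tone and depth of the response.
    persona = (
        "You are an experienced professor and supportive mentor "
        "assessing student reflection essays."
    )
    # Chain of thought: ask for step-by-step reasoning before any judgment.
    steps = (
        "Work step by step: first quote the relevant passages, then explain "
        "how they relate to the criterion, and only then state whether the "
        "criterion is met."
    )
    messages: List[Message] = [
        {"role": "system", "content": f"{persona}\n{steps}\nCriterion: {criterion}"}
    ]
    # Example-based: each sample essay is paired with a model response.
    for sample_essay, model_response in examples:
        messages.append({"role": "user", "content": sample_essay})
        messages.append({"role": "assistant", "content": model_response})
    # The essay to be assessed always comes last.
    messages.append({"role": "user", "content": essay})
    return messages
```

Passing one `(sample_essay, model_response)` pair gives a one-shot prompt; passing several gives a multi-shot prompt.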

A proof of concept

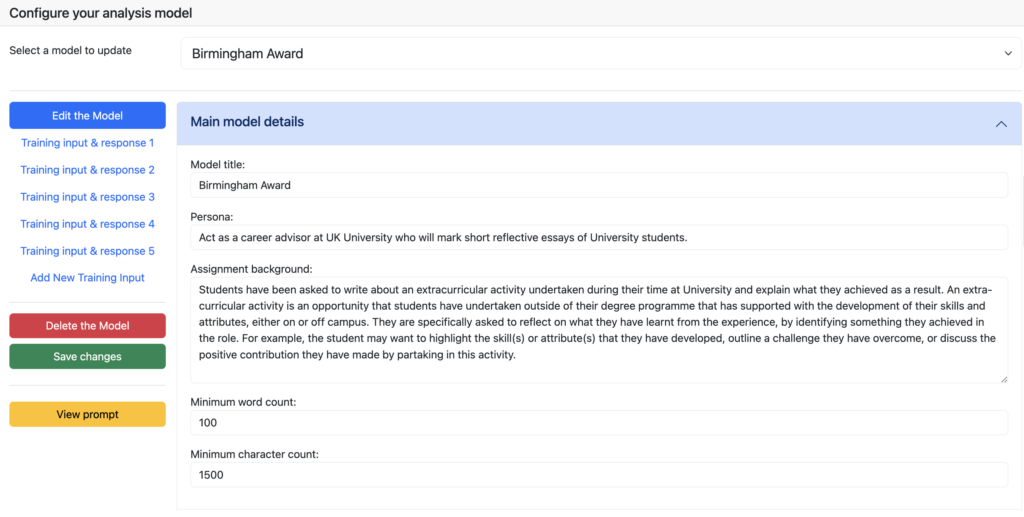

The Innovation Team, in collaboration with the Careers Network, is developing a proof-of-concept system to see if AI can lend a helping hand in assessing non-credit-bearing student reflection essays. Using Azure OpenAI, we apply carefully designed prompts to evaluate whether submissions meet specific marking criteria.

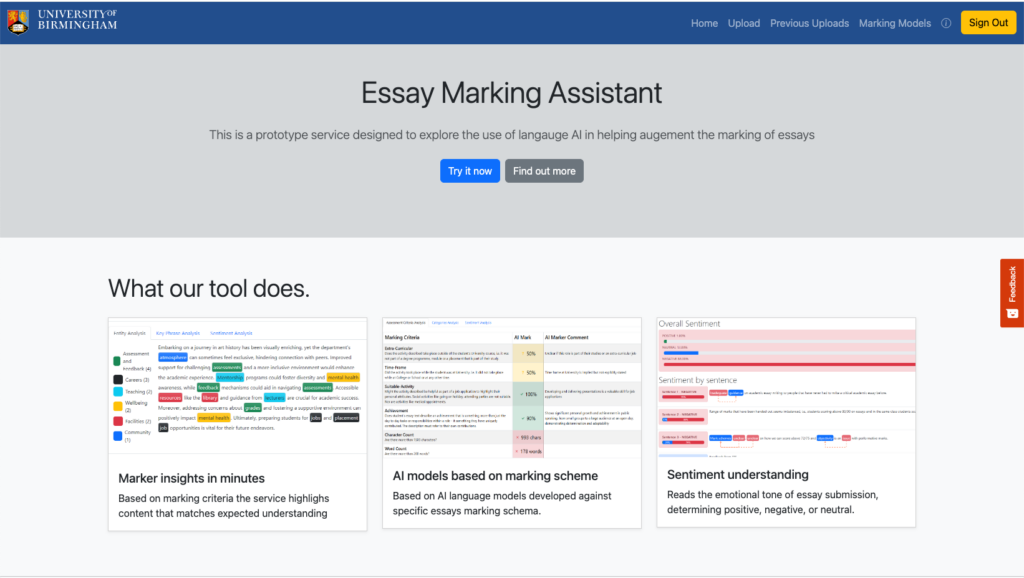

To boost reliability, users can provide sample essays and model responses – making use of the multi-shot prompt engineering technique. We also use Microsoft Language Studio to extract key details and analyse sentiment, giving a rich, contextual overview of each submission. This visual data helps assessors make more informed judgments.

While we’re still in the early stages, our tool shows promise. The generated output is reasonably consistent across runs and, based on the current training data, the tool generally makes sensible assessments. Of course, it’s not without its quirks. Simple tasks like word counts can trip it up, and when faced with ambiguous scenarios, the AI tends to stick to black-and-white responses, rarely admitting that it doesn’t know. And why does AI brush off a stint at Nando’s as irrelevant to a student’s future career aspirations? We’re scratching our heads too.
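Since word counts are easy for ordinary code, one common workaround is to do the counting outside the model and pass the result into the prompt as a stated fact. The sketch below is an assumption about how that could look, not a description of what our tool does today; the function names and the limit parameter are hypothetical.

```python
def count_words(essay: str) -> int:
    """Count whitespace-separated words deterministically."""
    return len(essay.split())


def word_count_note(essay: str, limit: int) -> str:
    """Build a sentence stating the word count, for inclusion in a prompt."""
    words = count_words(essay)
    status = "within" if words <= limit else "over"
    return f"The essay contains {words} words, which is {status} the {limit}-word limit."
```

The returned sentence could be added to the prompt so the model is told the count rather than asked to work it out.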

What does the research say?

A couple of years ago, there was anticipation around GAI’s potential impact on essay marking. However, subsequent trials found marks from GPT to be inconsistent and unreliable. AI often lacked the depth and nuance of human assessment, resulting in poor overall correlation with human-marked essays.

More recent studies suggest there have been significant improvements but, on closer examination, weak correlations persist between AI-marked and human-marked essays. While good at assessing technical aspects like spelling, punctuation, and grammar, AI struggles to recognise and reward flair, creativity, and advanced academic skills.
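For readers wondering what "correlation" means in practice here, the sketch below computes Pearson's correlation coefficient between two lists of marks for the same essays. This is an assumption on our part: the studies may well use other agreement measures, and the function name `pearson` is simply chosen for illustration. A value near 1 means the AI and human marks rise and fall together; a value near 0 means they barely track each other.

```python
import math
from typing import Sequence


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient between two equal-length lists of marks."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length sequences with at least two marks")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    # Covariance and variances, left unnormalised because the factors cancel.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when all marks are identical")
    return cov / math.sqrt(var_x * var_y)
```

Calling `pearson(human_marks, ai_marks)` on marks for the same set of essays gives a single number between -1 and 1.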

Achieving reliable results often requires extensive training, with researchers needing to train on 80% of the data to mark the remaining 20%.
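As a rough illustration of that 80/20 arrangement, the sketch below shuffles a set of human-marked essays and holds back a fifth of them for checking the AI's marks. The function name `split_80_20` and the fixed seed are assumptions for the example; the studies do not necessarily split their data this way.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_80_20(items: Sequence[T], seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle the items and return (training 80%, held-out 20%)."""
    shuffled = list(items)
    # A seeded generator keeps the split repeatable between runs.
    random.Random(seed).shuffle(shuffled)
    cut = len(shuffled) * 4 // 5
    return shuffled[:cut], shuffled[cut:]
```

With 100 marked essays, this leaves 80 for training and only 20 that the AI actually marks unseen.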

Despite progress, the jury is still out on whether prompt engineering alone can solve essay marking challenges. AI shows potential but isn’t yet the solution for this complex task.

What are the other challenges and risks?

Beyond prompt engineering, there are further hurdles to consider in utilising AI for essay assessment. The need for customised models for each essay, each requiring extensive training with relevant data, adds complexity and time to implement, potentially limiting scalability.

Moreover, given the considerable investment in tuition fees, students justifiably expect value for money, including access to high-quality academic assessments. The introduction of AI-driven grading may lead students to scrutinise the perceived value of their education, particularly if they question the reliability and fairness of grades generated by AI.

Additionally, educators may express reservations about relying solely on AI for grading, raising concerns about maintaining academic standards and the role of human judgment in assessments.

Navigating these challenges requires careful consideration and transparency. While GAI and prompt engineering hold promise for enhancing efficiency, overcoming these obstacles is essential for their integration into the academic community.

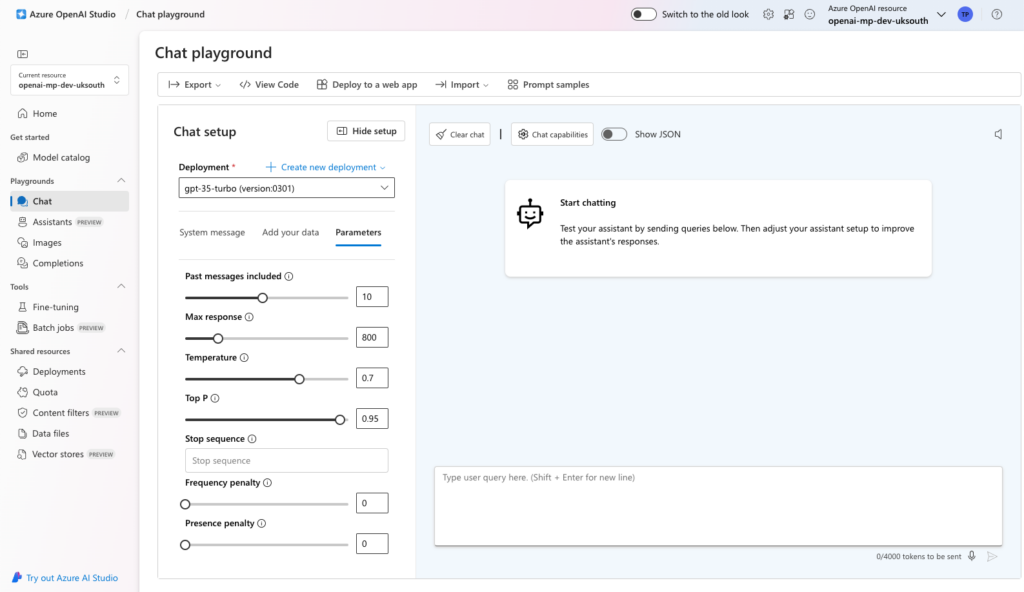

The technology

OpenAI Studio is an Azure Platform as a Service (PaaS) offering that provides the university with access to walled-garden instances of the popular OpenAI models. You can read more about the technology here: https://azure.microsoft.com/en-gb/products/ai-services/openai-service.

In short, the service enables the Innovation Team to integrate with powerful large language models (LLMs) using APIs. This allows the team to build simple but very powerful user experiences around the ChatGPT technology stack that go beyond the basic chatbot experience most users of the GPT service are familiar with.
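To give a flavour of what integrating over an API involves, the sketch below builds (but does not send) a chat completions request in the shape used by the Azure OpenAI REST interface. The endpoint, deployment name, key and API version are placeholders and assumptions, not our real configuration, and `build_chat_request` is a hypothetical helper written for this post.

```python
import json
import urllib.request
from typing import Dict, List


def build_chat_request(
    endpoint: str,
    deployment: str,
    api_key: str,
    messages: List[Dict[str, str]],
    api_version: str = "2024-02-01",  # assumed version; check what your resource supports
) -> urllib.request.Request:
    """Build (but do not send) a chat completions request for Azure OpenAI."""
    url = (
        f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
        f"/chat/completions?api-version={api_version}"
    )
    body = json.dumps({"messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )
```

Sending the request with `urllib.request.urlopen` returns JSON in which the model's reply sits under `choices[0].message.content`. In practice an official SDK would usually be preferable to hand-built requests; the point here is only that the service is an ordinary web API that an application can wrap with its own interface.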